Why Choose Us

Featured Technical Videos

Industrial Electronics and Servo Motor Repair - Hydraulic and Electric Motor Rebuild Facility

Dallas/Fort Worth Repair Facility - Component-Level Repair - Electronics/Electric Motors/Hydraulics/Pneumatics

Component-Level Repair of Industrial Electronics, Motors, Servo Motors, and Hydraulic and Pneumatic Components

Servo Motor Repair and Testing Procedures - Global Electronic Services

Electric Motor Repair and Rebuild Explained - Complete Repair Process

Directional Valve Repair Explained - Complete Disassembly and Reassembly

Global Electronic Services Locations

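Global Electronic Services provides true in-house, comprehensive repair services and solutions for most manufacturers, covering new, refurbished, and obsolete equipment. Our standard turnaround time is 1-5 days, with 24-48 hour service available at no extra charge. Every repair includes true load testing, complete functional testing, and an 18-month service warranty.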

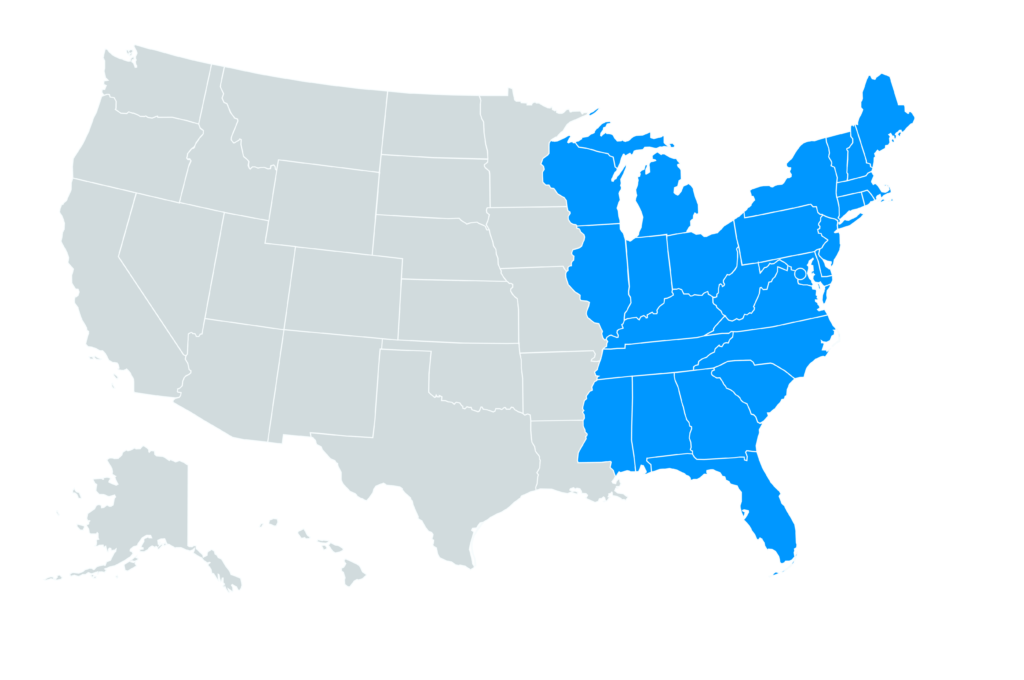

East Coast Repair Facility (Corporate Location)

Free pickup and delivery in Georgia, Alabama, South Carolina, North Carolina, and Tennessee.

5325 Palmero Court

Buford, GA 30518

- Local: 770-965-9294

- Fax: 770-965-1314

- Email: sales@www.fraserlikely.com

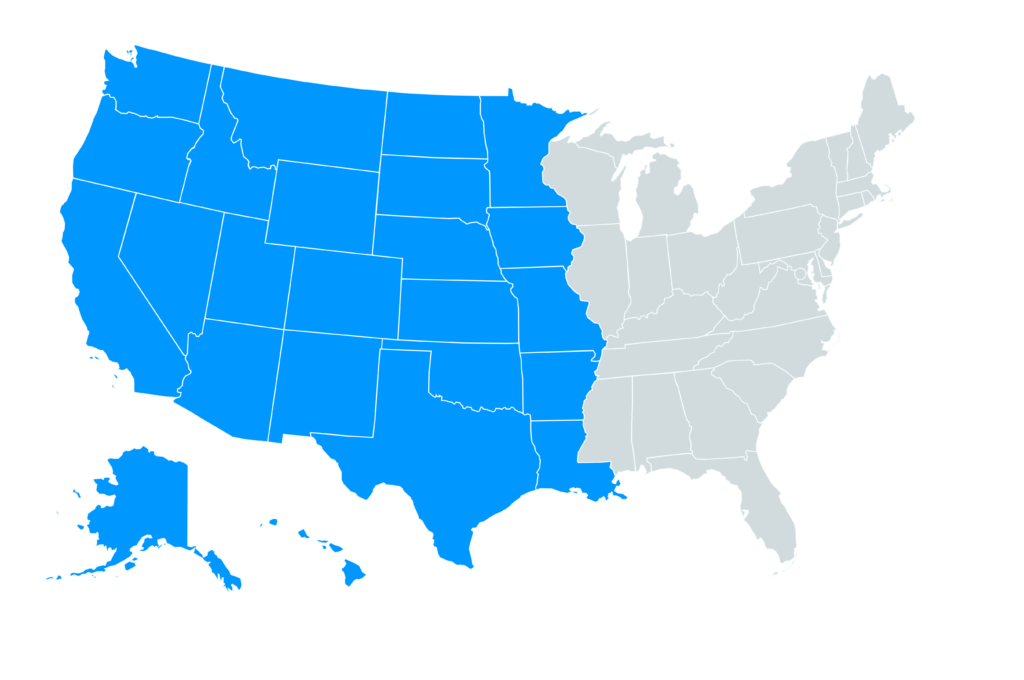

West Coast Repair Facility

Free pickup and delivery in Texas, Arkansas, Kansas, Louisiana, New Mexico, and Oklahoma.

1010 Pamela Drive

Euless, TX 76040

- Local: 817-545-2911

- Fax: 817-545-2991

- Email: sales@www.fraserlikely.com